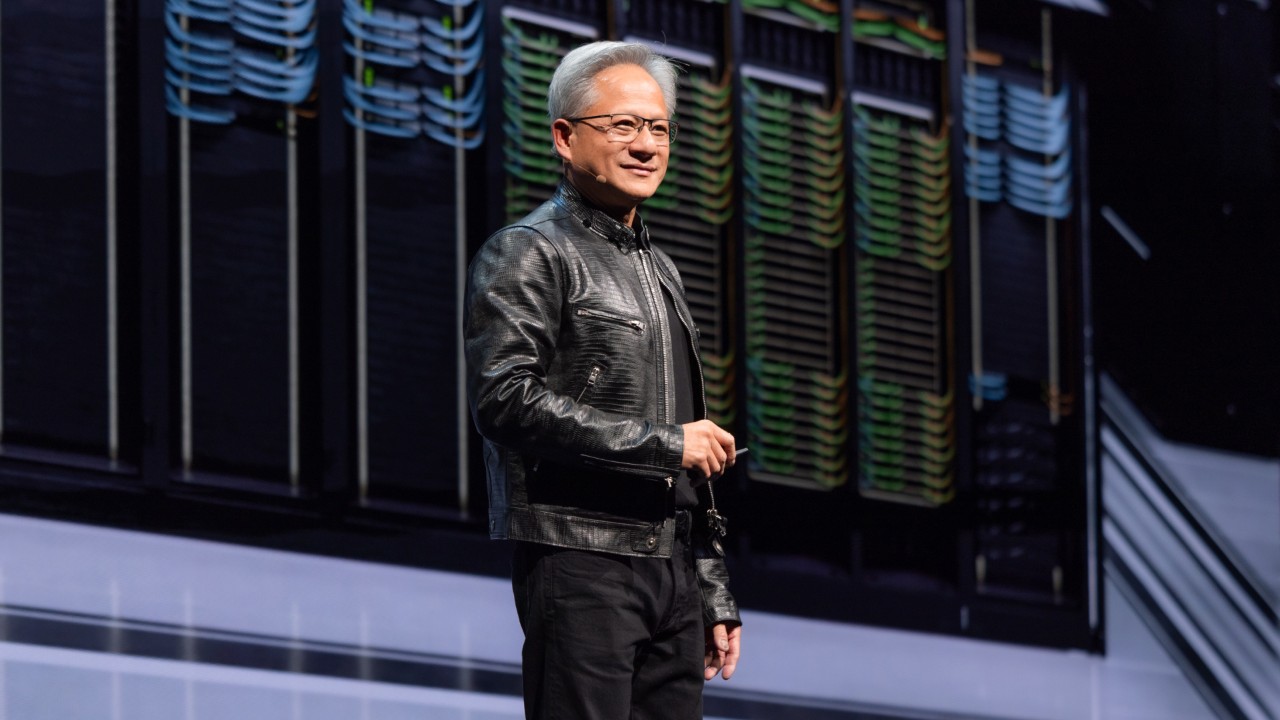

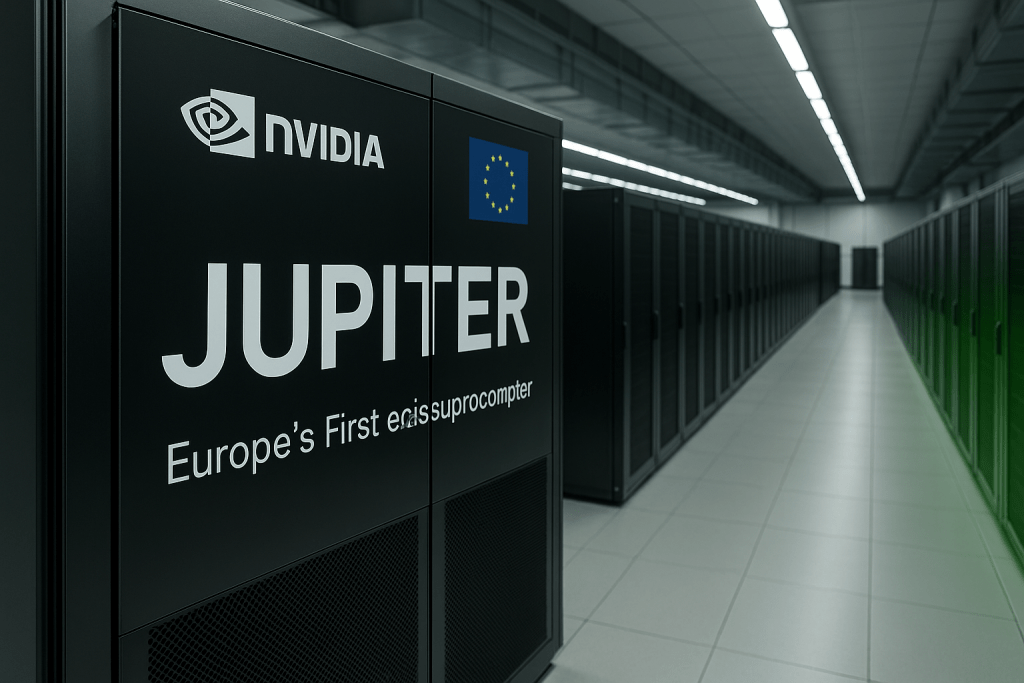

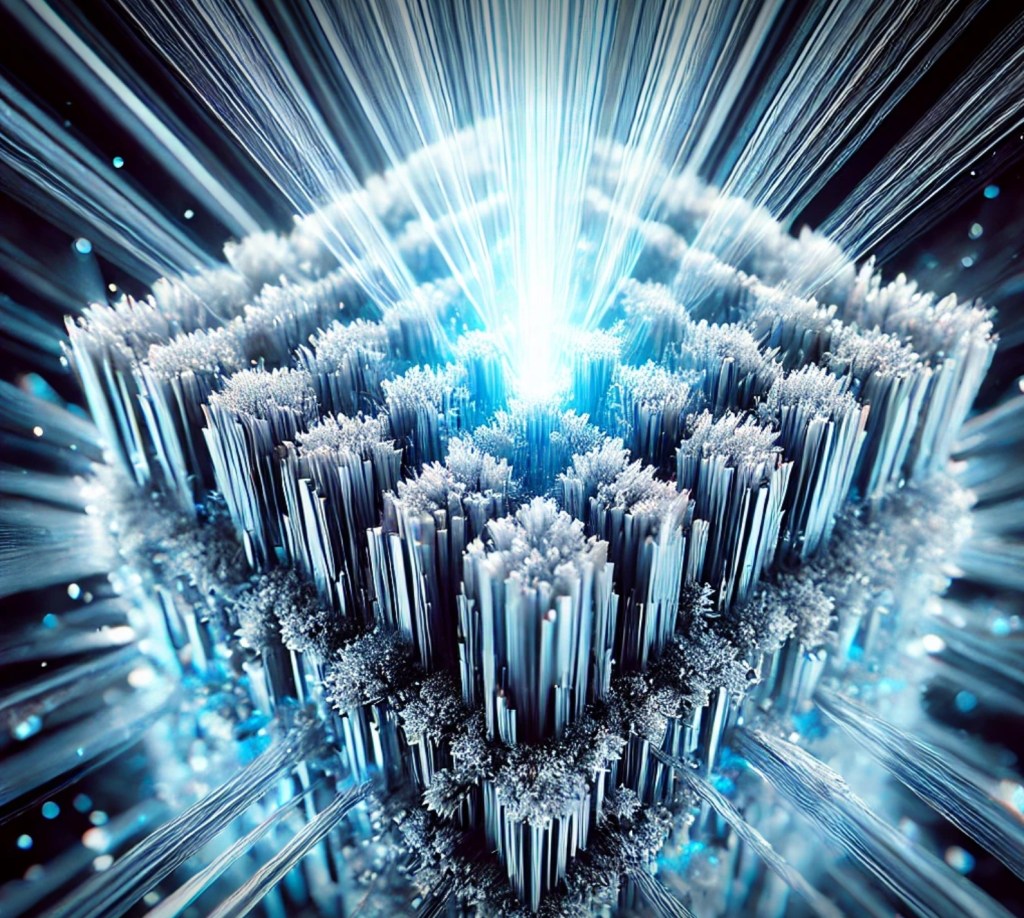

When it comes to computing power, NVIDIA has consistently demonstrated a clear evolutionary direction while maintaining its position as a leader in the semiconductor industry. Their recently revealed giant chip does not stray from this path either, furthermore, NVIDIA claims it is capable of singlehandedly processing the traffic of the global internet!

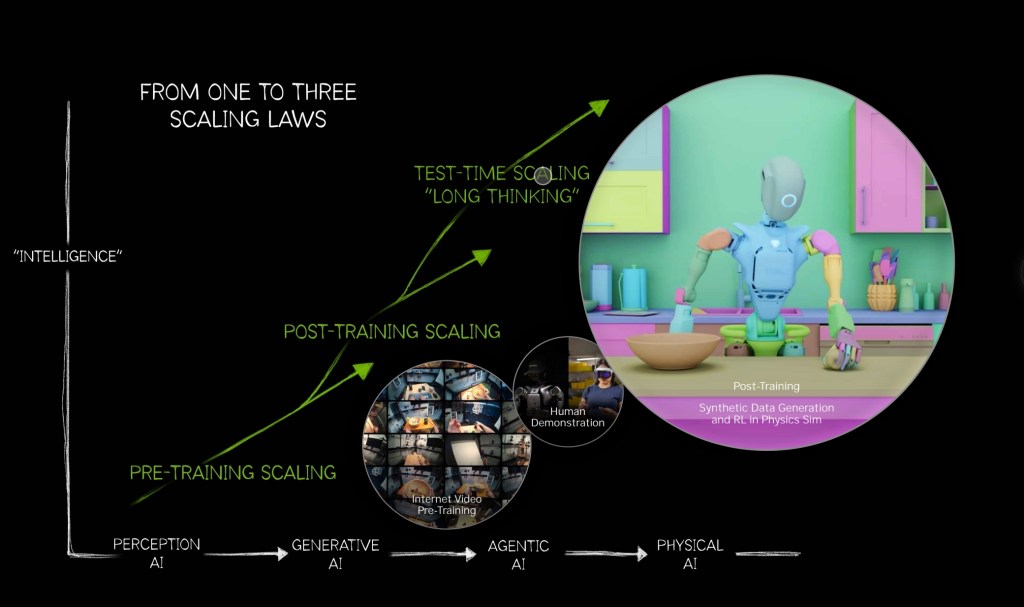

Since the introduction of the Blackwell architecture in NVIDIA‘s roadmap back in 2023, the company has been aiming to develop a system capable of training and running inference for trillion-plus parameter models. This includes GPT-scale Large Language Models (LLMs) as well as large-scale simulation and analytics applications. In early 2024, NVIDIA officially launched the GB200 NVL72, which has since undergone extensive testing. Multiple research teams have already demonstrated its potential throughout ambitious projects, such as the simulating of a 70 trillion-spin Ising system using Blackwell-based hardware.

Untitled © 2023 by NVIDIA is licensed under CC BY 4.0

Shortly after its reveal, industry leaders such as ASUS and HPE began shipping full raks. Building on the momentum and feedback from these large-scale deployements, NVIDIA accelerated development and by mid-2025, formally introduced the new generational GB300 as the next step of its roadmap. Dell Technologies, and CoreWeave, a leading AI hyperscaler, announced the delivery of the first NVIDIA GB300 NVL72 systems featuring roughly 50% enhanced performance compared to its predecessor, marking a significant milestone in AI computing. CoreWeave became the first cloud provider to deploy these systems, with plans to scale deployments globally throughout 2025, and by August had managed to incorporate it into their system.

But what really is this new gigantic superchip? The NVIDIA GB300 NVL72 is a full-rack scale AI supercomputer platform that was unveiled on July 2025. It’s build around the Blackwell Architecture which is a next generation GPU microarchitechture designed specifically by NVIDIA. The giant chip was designed to for large scale generative AI workloads, including training and real time AI inference for trillion-parameter models. The platform merges CPU and GPU innovations in a vertically integrated system that replaces prior generation Hopper-based platforms.

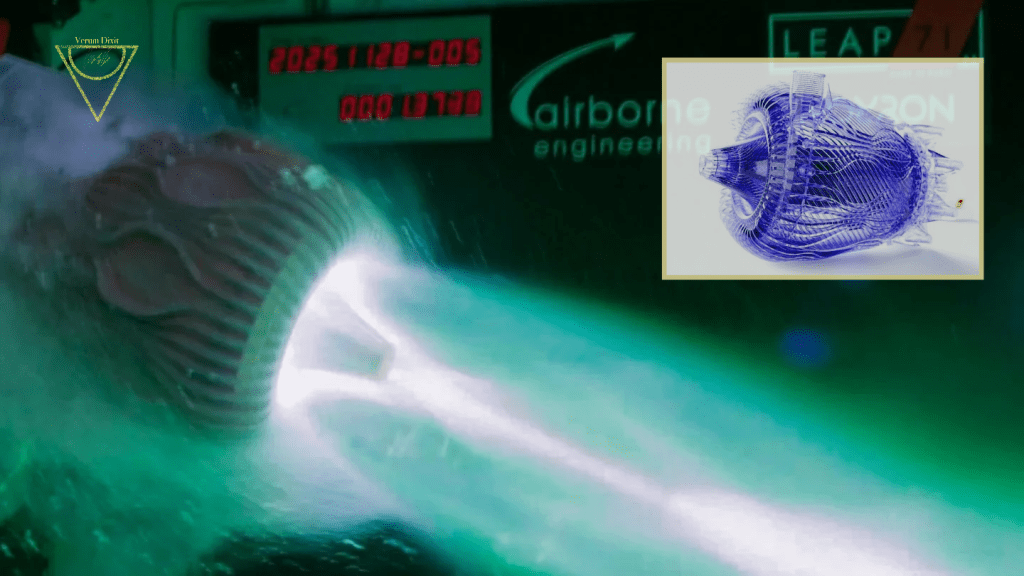

Untitled © 2023 by NVIDIA is licensed under CC BY 4.0

At the core of the GB300 are 36 Grace CPUs paired with 72 Blackwell Ultra GPUs packaged into 18 Grace-Blackwell superchips. Each GB300 superchip integrates two Grace CPUs and four Blackwell Ultra GPUs (six dies per superchip) all connected via fifth-generation NVLink Chip-to-chip interconnects. The racks 18 superchips are arranged across Compute trays and supported by NVLink to switch trays, delivering roughly about 130TB/s of aggregate GPU-to-GPU bandwidth across the rack These interconnects enable the entire rack to operate as a single GPU-like system supporting unified memory access and interprocess GPU communication with extremely low latency.

The system offers up to 288 TB of HBM3e memory (significantly more than the GB200) allowing it to achieve new heights in both compute density and memory thoughput. According to NVIDIA, the GB300 NVL72 delivers up to 50x higher output for reasoning model inference compared to Hopper-based systems (such as HGX H100), particularly for trillion parameter mixture of experts models. It also provides 4x times faster training speeds and latency for real time inference at as low as 50 milliseconds on large models.

Since 2024, NVIDIA has secured multiple collaboration contracts with leading hardware providers, including Google, Supermicro,ASUS , HPE, Ingrasys and other major contributors and institutions to bring the GB300 to market, and test the chips abilities integrated within OCP ( Open Compute Project )- compliant racks.

Yet, the GB300 is still only a stepping stone toward the emerging NVLink Fusion standard, which will enable NVIDIA GPUs to connect directly with third-party CPUs from companies such as Qualcomm and MediaTek. This advancement will open the door to more flexible server designs, extending beyond NVIDIA‘s Grace-only architecture.

To keep pace with the rapidly evolving field of deep-space computing and next generation AI harware, consider subscribing to our Newsletter, or explore the latest research updates wia NVIDIA’s Roadmap, the MIT Technology Review or the IEEE Xplore Digital Library for cutting edge publications on advanced AI supercomputing platforms.

Subscribe to continue reading

Subscribe to get access to the rest of this post and other subscriber-only content.